Author: Elizaveta Lebedeva

Today Mark Zuckerberg testified before Congress about Facebook's data privacy practices in connection with the Cambridge Analytica scandal. Have you ever thought about how your personal data is used and for what purposes? Only about a month is left before the General Data Protection Regulation (GDPR) becomes enforceable in the European Union on May 25, 2018. Awareness of the legal rules on data usage is an important part of any business and requires careful attention. In most companies, it is data scientists and data engineers who work directly with customers’ data. They use data to predict clients’ behavior, recommend products and, ultimately, make a profit. In this blog post, I cover the most important points of the new Regulation and how they will influence the Data Science workflow in organizations.

Source: pixabay.com

First, what exactly is GDPR? This regulation of the European Parliament and of the Council was adopted in 2016. It consists of a new set of rules designed to give users more control over their personal data. Its goal is to simplify the regulatory environment for business so that both clients and companies can benefit from the ‘digital’ economy [1]. To comply with the regulation, organizations have to ensure the lawful provenance of personal data and protect it from misuse and exploitation.


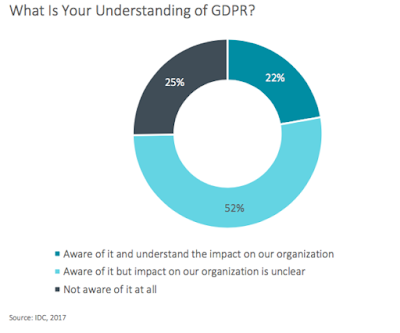

In 2017, only 22% of companies were aware of GDPR and understood its impact, while 25% were not aware of it at all. The most recent survey in this field (Cyber Security Breaches Survey 2018) showed that 38% of UK businesses had created or changed policies and procedures for the new regulation. Most likely, this share will grow as the enforcement date approaches. There is good reason for it: fines for non-compliance can reach €20 million or 4% of the organization’s total worldwide annual turnover, whichever is higher.
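The fine ceiling quoted above is simply the larger of two amounts. Here is a minimal Python sketch of that upper limit, assuming the turnover is given in euros; the function name is illustrative only. It covers the higher tier of fines (GDPR also has a lower tier of €10 million or 2%), and actual fines are set case by case by supervisory authorities, so this computes a ceiling, not an expected penalty.

```python
def max_upper_tier_fine(annual_turnover_eur: float) -> float:
    """Ceiling of an upper-tier GDPR fine: EUR 20 million or 4% of total
    worldwide annual turnover, whichever is higher (illustrative helper)."""
    fixed_cap_eur = 20_000_000
    turnover_based_cap = annual_turnover_eur * 4 / 100  # 4% of turnover
    return max(fixed_cap_eur, turnover_based_cap)


if __name__ == "__main__":
    # EUR 100 million turnover: 4% is EUR 4 million, so the fixed cap wins.
    print(max_upper_tier_fine(100_000_000))    # 20000000
    # EUR 1 billion turnover: 4% is EUR 40 million, which exceeds EUR 20 million.
    print(max_upper_tier_fine(1_000_000_000))  # 40000000.0
```

The fixed amount dominates for smaller organizations, while the percentage takes over once turnover exceeds €500 million.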

Now, let’s look at the main ways GDPR affects Data Science. They can be summarized in three areas: data processing, explanation of automated decision-making results, and prevention of bias and discrimination. Below, I describe them one by one.

1. Data analysis starts with data collection and processing. GDPR will impose limits on consumer profiling and extend requirements for data management. Specifically, organizations can use a client’s personal data if they can demonstrate a business purpose that does not violate the client’s rights and freedoms. For example, banks can use personal data to prevent money laundering or to determine the amount of available credit, but not for any additional purpose without asking the customer’s permission. For data science, this means less information available for exploratory analysis and the need to build robust anonymization processes during data engineering.

2. The next area is the right to an explanation of automated decision-making results, which GDPR grants to consumers. This provision has caused a lot of discussion and controversy. Some people argue that it could limit the range of methods that data scientists apply in their work. Decisions based on some algorithms (especially in deep learning) may not be fully understandable or transparent because of so-called ‘black box’ computation, which complicates their interpretation and explanation. However, GDPR does not specify which fields count as automated decision-making; examples include insurance, credit approvals, and recruitment (as in the paper by the United Kingdom’s Information Commissioner’s Office [2]). In addition, the need for explanation may affect decision engines rather than the choice of methods for model training [3]. That’s why I think GDPR should not be considered a restriction on Data Science. Some people even see it as a force against overcomplicating analysis. After all, applying more interpretable algorithms can sometimes be a much better idea.

3. In addition, GDPR forces organizations to account for discrimination or bias in automated decision-making. As a result of this provision, specific categories of data (such as religion, sex, or health status) may not be usable for predictive and prescriptive analytics. However, this can be considered a positive influence on the quality of data science results. It may turn out that some data is not needed to build an accurate model and that the analysis can be conducted without it. Also, more effort at the data preprocessing step (ensuring limited access to personally identifiable information) will minimize the risk of error and bias later on. Implementing a strong anonymization procedure will protect personal data and help prevent privacy violations.
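Point 1 of the list above mentions building anonymization processes during data engineering. As an illustration, here is a minimal Python sketch of one common building block, assuming records arrive as plain dictionaries: direct identifiers are dropped, only the fields needed for a stated purpose are kept, and the customer ID is replaced by a keyed hash (HMAC-SHA256). The field names, the allowed-field set, and the key are all hypothetical. Note that this is pseudonymization rather than full anonymization: whoever holds the key can re-link tokens to customers, and GDPR still treats pseudonymized data as personal data.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would be kept in a key vault,
# stored separately from the pseudonymized data.
SECRET_KEY = b"replace-with-a-securely-stored-key"

# Hypothetical purpose limitation: the only fields this analysis needs.
ALLOWED_FIELDS = {"age_band", "monthly_spend", "region"}


def pseudonymize(customer_id: str, key: bytes = SECRET_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same ID always maps to the same token, so datasets can still be
    joined, but the token cannot be recomputed or linked back to the ID
    without the key.
    """
    return hmac.new(key, customer_id.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_analysis(record: dict) -> dict:
    """Keep only purpose-relevant fields and pseudonymize the customer ID."""
    prepared = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    prepared["customer_token"] = pseudonymize(record["customer_id"])
    return prepared


raw = {
    "customer_id": "C-1042",
    "name": "Jane Doe",
    "email": "jane@example.com",
    "age_band": "30-39",
    "monthly_spend": 420.0,
    "region": "EU-West",
}
print(prepare_for_analysis(raw))  # name, email and raw ID are gone
```

The key is what makes the tokens hard to link back: a plain, unkeyed hash of a short customer ID could be reversed by hashing every possible ID.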
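Point 2 of the list above argues that more interpretable algorithms can sometimes be the better choice. A linear model such as logistic regression is a standard example: its log-odds are a sum of per-feature terms, z = b0 + Σ wᵢxᵢ, so each individual decision can be explained by listing those terms. The Python sketch below assumes an already-fitted model with hypothetical coefficients and standardized features; it illustrates the idea and is not a method that GDPR prescribes.

```python
import math

# Hypothetical coefficients of an already-fitted logistic regression for
# credit approval; the features are assumed to be standardized.
WEIGHTS = {"income": 1.2, "debt_ratio": -1.8, "late_payments": -0.9}
INTERCEPT = 0.3


def log_odds(applicant: dict) -> float:
    """z = b0 + sum_i w_i * x_i"""
    return INTERCEPT + sum(w * applicant[f] for f, w in WEIGHTS.items())


def approval_probability(applicant: dict) -> float:
    """Logistic link: p = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-log_odds(applicant)))


def explain(applicant: dict) -> list:
    """Per-feature contributions w_i * x_i to the log-odds, largest
    magnitude first. Together with the intercept they sum to z."""
    contributions = [(f, w * applicant[f]) for f, w in WEIGHTS.items()]
    return sorted(contributions, key=lambda fc: abs(fc[1]), reverse=True)


applicant = {"income": 0.5, "debt_ratio": 1.5, "late_payments": 1.0}
print(f"approval probability: {approval_probability(applicant):.2f}")
for feature, contribution in explain(applicant):
    print(f"{feature:>14}: {contribution:+.2f}")
```

For this example applicant, the debt ratio (-2.70) pushes the score down the most, followed by late payments (-0.90), while income (+0.60) pushes it up: exactly the kind of reason that can be reported back to a customer.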
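Point 3 of the list above notes that some categories of data may not be usable for analytics and that preprocessing should limit access to personal information. Here is a minimal Python sketch, assuming dictionary records and a hypothetical set of sensitive fields (the real list would come from legal review). Removing those columns does not by itself rule out bias, because other features can act as proxies for them, so the sketch also includes a simple outcome check: the largest gap in approval rates between groups. The group labels are assumed to come from a separately governed audit process.

```python
# Hypothetical set of attributes excluded from model training.
SENSITIVE_FIELDS = {"religion", "sex", "health_status"}


def drop_sensitive(record: dict) -> dict:
    """Return a copy of the record without sensitive attributes."""
    return {k: v for k, v in record.items() if k not in SENSITIVE_FIELDS}


def approval_rate_gap(decisions: list, groups: list) -> float:
    """Largest difference in approval rate between any two groups.

    A simple demographic-parity style check: 0.0 means every group is
    approved at the same rate. decisions[i] is a bool, groups[i] a label.
    """
    counts = {}  # group -> (number of cases, number approved)
    for approved, group in zip(decisions, groups):
        n, k = counts.get(group, (0, 0))
        counts[group] = (n + 1, k + int(approved))
    if not counts:
        return 0.0
    rates = [k / n for n, k in counts.values()]
    return max(rates) - min(rates)


applicant = {"income": 52000, "region": "EU-West", "sex": "F", "health_status": "n/a"}
print(drop_sensitive(applicant))  # {'income': 52000, 'region': 'EU-West'}

decisions = [True, True, False, True, False, False]
groups = ["A", "A", "A", "B", "B", "B"]
print(f"approval-rate gap: {approval_rate_gap(decisions, groups):.2f}")  # 0.33
```

A large gap does not prove discrimination on its own, but it flags where a model’s decisions deserve a closer look.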

GDPR applies not only to companies operating in the EU, but also to any company whose clients are in the EU. This means that companies such as Google and Facebook must also follow the regulation, and they have already started to do so. For example, in January, Facebook announced its own privacy dashboard. Besides that, it intends to apply the EU's data protection changes worldwide.

As we've seen, the upcoming data protection regulations will affect data scientists' work. At first glance, they may look like a threat and a new limitation, but I would suggest seeing them as an opportunity to improve and perfect data discovery and data engineering, which in turn can enhance customer experience, increase consumers' trust, and attract new clients and business offerings.

PS: As I finish writing this post, Mark Zuckerberg, testifying before Congress, is answering the question of whether Europe's privacy rules (the GDPR rules) should be applied in the U.S., and says that “everyone in the world deserves good privacy protections.” Our personal data is very valuable, and we should be aware of where it is used and why.

References

- EU General Data Protection Regulation, http://www.privacy-regulation.eu/

- Bryce Goodman, Seth Flaxman, European Union regulations on algorithmic decision-making and a "right to explanation", ICML Workshop on Human Interpretability in Machine Learning (WHI 2016), New York, NY, 2016, https://arxiv.org/abs/1606.08813

- Cyber Security Breaches Survey 2018: Preparations for the new Data Protection Act, https://www.gov.uk/government/statistics/cyber-security-breaches-survey-2018-preparations-for-the-new-data-protection-act

[1] https://www.zdnet.com/article/gdpr-deadline-looms-but-businesses-still-arent-ready/

[2] https://ico.org.uk/media/for-organisations/documents/2013559/big-data-ai-ml-and-data-protection.pdf

[3] https://thomaswdinsmore.com/2017/07/17/how-gdpr-affects-data-science/

We live in an era when hardly any information is truly protected. I have worked as a professional developer for 14 years, and I have never seen a truly secure computer system.

I know how complicated systems are, and their complexity is increasing rapidly. We all know how easy it is to break complex multicomponent systems, especially when the human factor is involved. You can protect systems very thoroughly, but there are infinitely many ways to breach them. The problem is fundamental: we made data easy to copy, and we made data easy to access from anywhere in the world.

Those two factors are FUNDAMENTAL characteristics of data. Can you really protect data with these characteristics?

No one can guarantee the protection of any bit of information you have ever put into the digital world.

If someone tries to persuade you that your data is safe in their systems (like FB), that means he/she is a liar, and quite possibly he/she has a PURPOSE in persuading you...

The second threat is that today's systems are getting smarter and smarter. You can anonymize data, but if a system has a large amount of data about you, it will recognize you. We are all different: each of us has a distinct set of features and produces data in our own way, so each of us can be identified very easily. Correlating a user's hash in anonymized data with the real person is not hard at all, even with today's technology. Can you imagine tomorrow's?

And third, neural networks really are a "black box", and we don't know how big this box can be. It can contain information about everyone, and we all know that FB, Google, and the other major companies have close relationships with governments, at least with one: the US government. Google has all your location data (look at 'My Timeline' in Google Maps) and all your browser search history, which nowadays is equivalent to your thoughts; Google Photos has all your photos and photos of the people you have met. FB and messengers know your conversations; this list could go on endlessly. People from special agencies can process all this data and build an enormous network, which is a perfect tool for various goals. So far we haven't seen mass data processing used against users, but it is going to happen. Everything it needs is in place: total information about us and different kinds of "bad guys" (including governments of various countries) who want to use this information. What we are waiting for is not a miracle, but The CASE.
